Bagging in Machine Learning Explained

Batch gradient descent refers to calculating the derivative from all training data before calculating an update.
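As a rough illustration of that idea, here is a minimal sketch that fits a straight line by batch gradient descent. The function name, the toy data, the learning rate, and the number of epochs are all assumptions chosen for the example, not values taken from any particular source.

```python
def batch_gradient_descent(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Accumulate the gradient over ALL training examples first ...
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            error = (w * x + b) - y
            grad_w += 2.0 * error * x / n
            grad_b += 2.0 * error / n
        # ... and only then apply a single update to the parameters.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = batch_gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```

The point to notice is that the parameters change once per pass over the data, not once per example.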

Bagging Vs Boosting In Machine Learning Geeksforgeeks

In layman's terms, Machine Learning (ML) can be explained as automating and improving the learning process of computers.

Machine learning is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Random Forest is one of the most popular and most powerful machine learning algorithms. Through a series of recent breakthroughs, deep learning has boosted the entire field of machine learning.

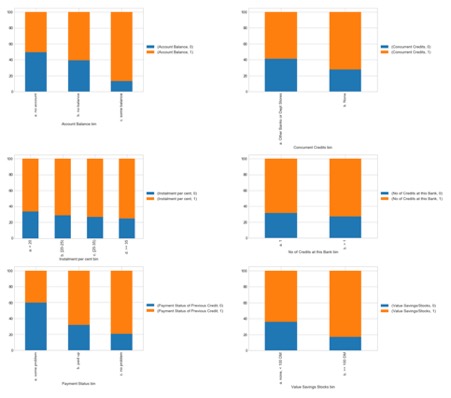

The blue dots represent the throws made to hit the target value. The simplest way to calculate bias and variance would be to use a library called mlxtend (machine learning extension), which is targeted at data science tasks. Machine learning model bias and variance explained.

The center of the dartboard, shown in red, represents the target, or the target value in ML terms. The trade-off between high variance and high bias is a very important concept in statistics and machine learning. Arthur Samuel, a pioneer in the field of artificial intelligence and computer gaming, coined the term "machine learning". He defined machine learning as a "field of study that gives computers the capability to learn without being explicitly programmed".

Bias-variance calculation example.

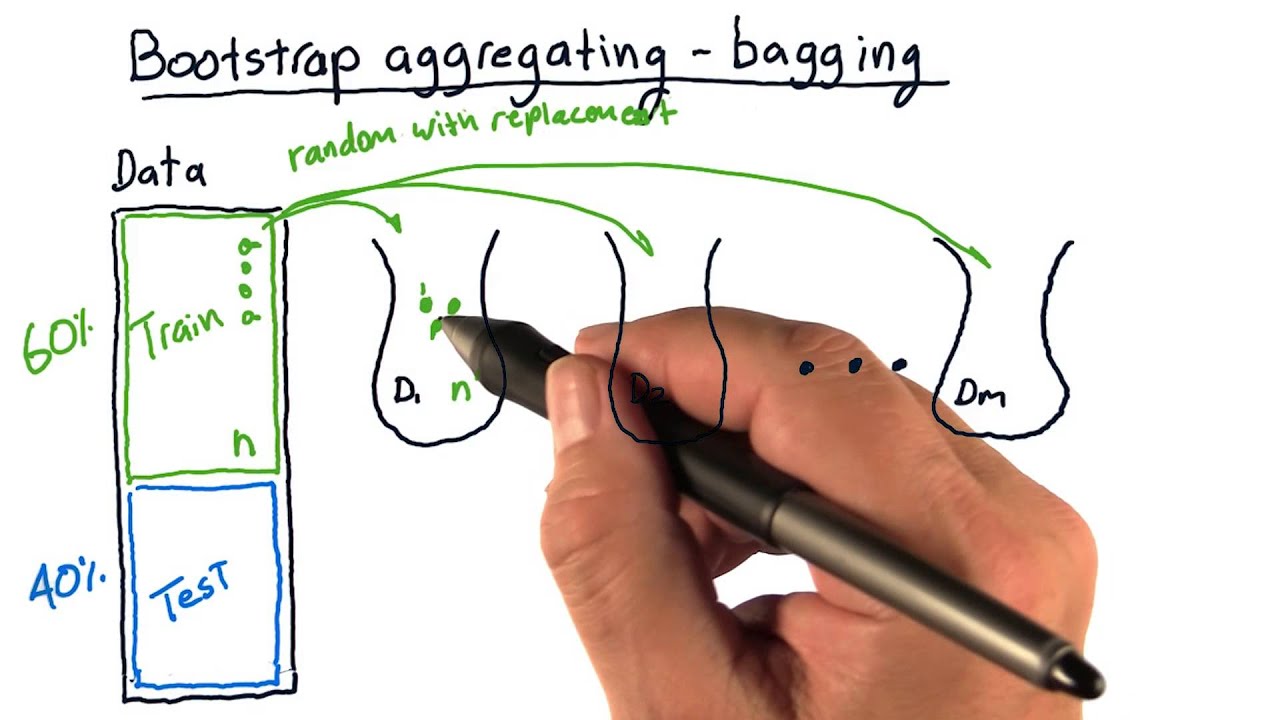

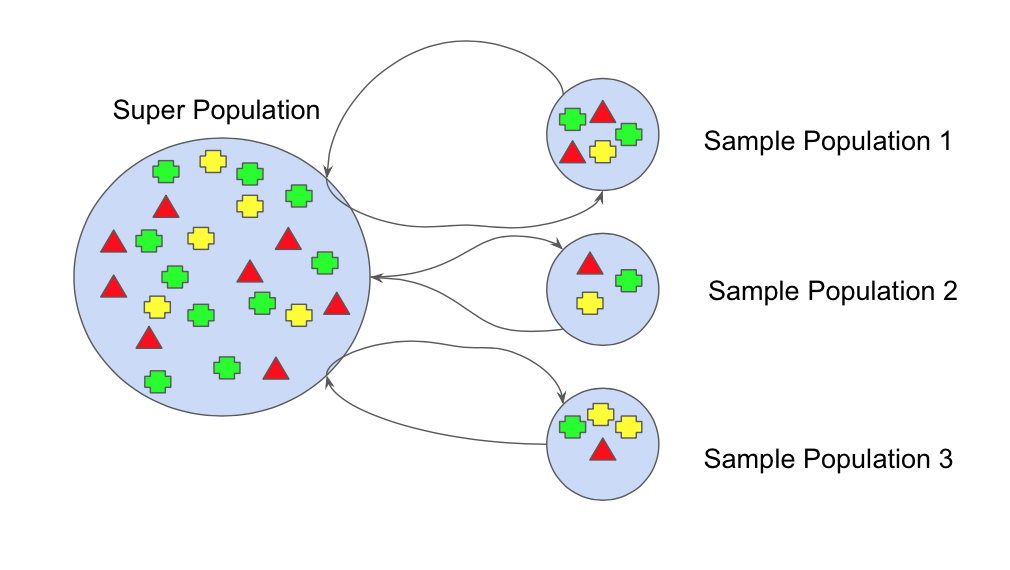

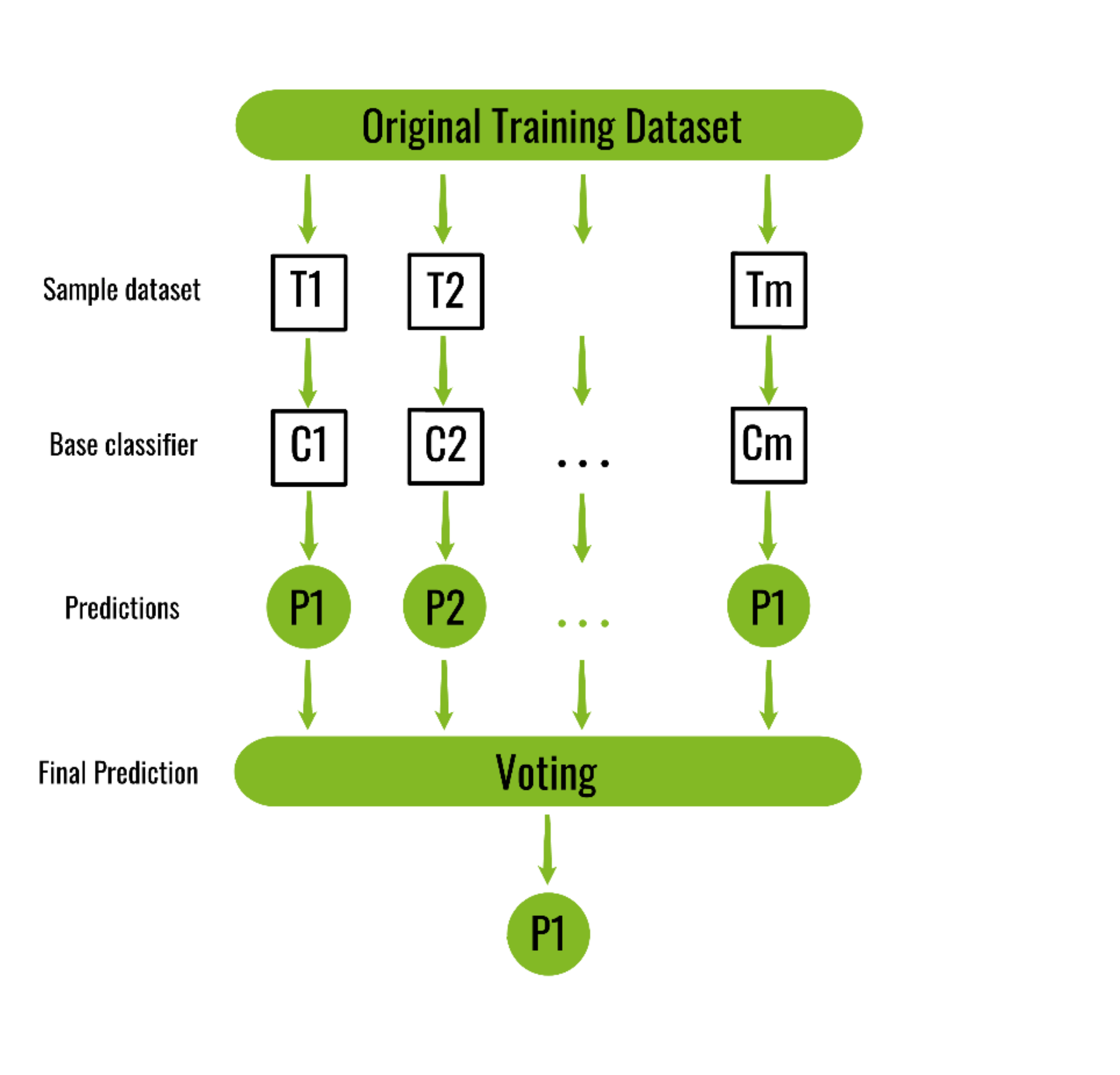

Selection from Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow, 2nd Edition. Now even programmers who know close to nothing about this technology can use simple tools. In Section 2.4.2 we learned about bootstrapping as a resampling procedure, which creates b new bootstrap samples by drawing samples with replacement from the original training data.
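The resampling step can be sketched in a few lines of standard-library Python. The helper name `bootstrap_samples`, the seed, and the toy data below are illustrative assumptions, not part of any library.

```python
import random


def bootstrap_samples(data, b, seed=0):
    """Return b bootstrap samples, each drawn with replacement from data."""
    rng = random.Random(seed)
    n = len(data)
    # Each sample has the same size as the original data. Because we draw
    # with replacement, some items repeat and others are left out.
    return [[rng.choice(data) for _ in range(n)] for _ in range(b)]


# Hypothetical training data.
training_data = [2, 4, 6, 8, 10]
samples = bootstrap_samples(training_data, b=3)
for sample in samples:
    print(sample)
```

Each printed list has five entries drawn from the original five values, usually with duplicates.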

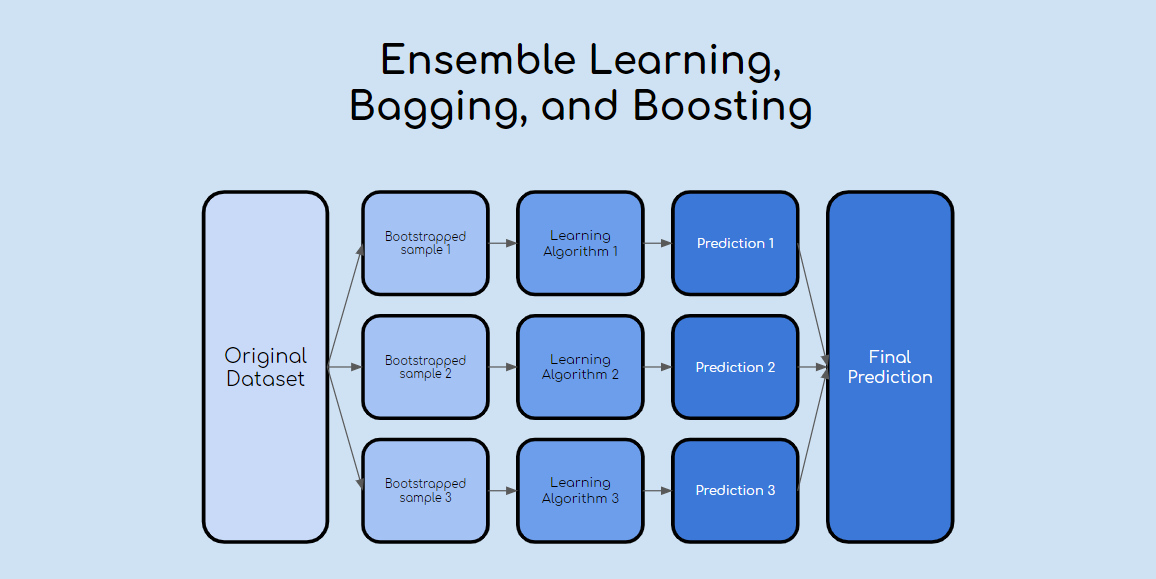

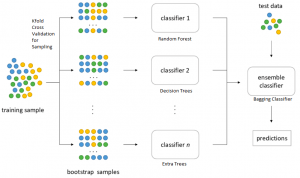

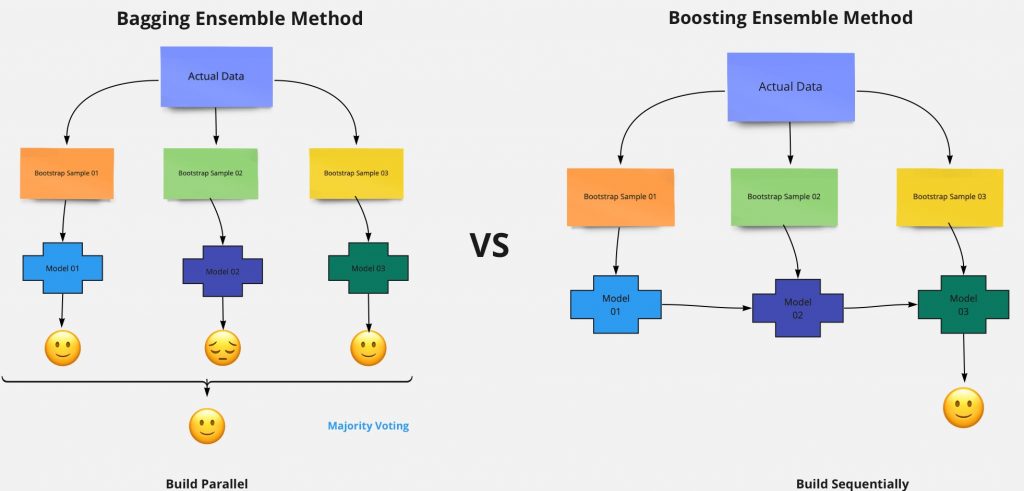

Deep learning turned out to be capable of mind-blowing achievements that no other Machine Learning (ML) technique could hope to match, with the help of tremendous computing power and great amounts of data. Optimization is a big part of machine learning. Random Forest is a type of ensemble machine learning algorithm called Bootstrap Aggregation, or bagging.

Fast-forward 10 years and Machine Learning has conquered the industry. In this post you discovered gradient descent for machine learning. In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling.

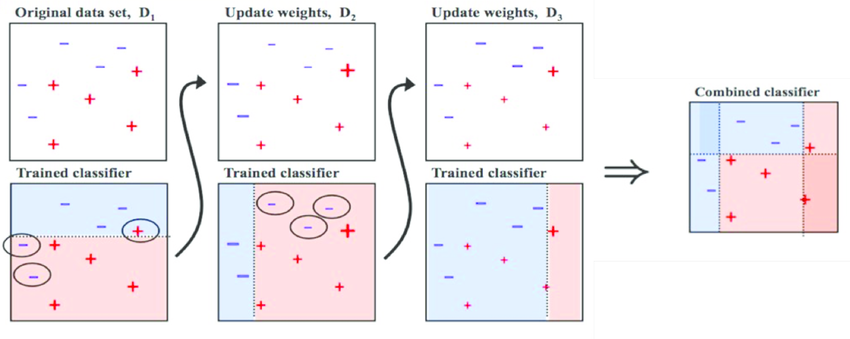

The bias-variance trade-off has a very significant impact on determining the complexity, underfitting, and overfitting of any machine learning model. This enthusiasm soon extended to many other areas of Machine Learning. Bootstrap aggregating, also called bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods, it can be used with any type of method.
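To make the idea concrete, here is a small from-scratch sketch of bagging for regression. It trains one-split trees (stumps) on bootstrap samples and averages their predictions. The base learner, the function names, the number of models, and the toy data are all assumptions picked for illustration, and a real project would more likely use an existing library implementation.

```python
import random
from statistics import mean


def fit_stump(points):
    """Fit a one-split regression tree (a stump) to (x, y) pairs."""
    xs = sorted({x for x, _ in points})
    if len(xs) < 2:
        # Degenerate sample with a single distinct x: predict the mean.
        constant = mean(y for _, y in points)
        return lambda x: constant
    best = None
    for lo, hi in zip(xs, xs[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in points if x <= threshold]
        right = [y for x, y in points if x > threshold]
        left_mean, right_mean = mean(left), mean(right)
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def bagged_stumps(points, n_models=25, seed=0):
    """Train one stump per bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        sample = [rng.choice(points) for _ in points]  # with replacement
        models.append(fit_stump(sample))
    return lambda x: mean(model(x) for model in models)


# Hypothetical toy data: a noisy step from about 0 to about 10 at x = 5.
points = []
for x in range(10):
    noise = 0.5 if x % 2 else -0.5
    points.append((x, (0.0 if x < 5 else 10.0) + noise))

predict = bagged_stumps(points)
print(predict(1), predict(8))
```

Averaging over many resampled models is what smooths out the quirks of any single stump, which is the variance reduction the paragraph above describes.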

Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms. Machine learning (ML) is a field of inquiry devoted to understanding and building methods that learn, that is, methods that leverage data to improve performance on some set of tasks.

The mlxtend library offers a function called bias_variance_decomp that we can use to calculate bias and variance. This chapter illustrates how we can use bootstrapping to create an ensemble of predictions.

Gradient descent is a simple optimization procedure that you can use with many machine learning algorithms. The above diagram represents four dartboards with points plotted on each one.

Let's put these concepts into practice: we'll calculate bias and variance using Python. The bias-variance trade-off is one concept that affects all supervised machine learning algorithms.
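One way to do this without any third-party package is sketched below. It repeatedly trains a model on bootstrap samples and splits the average squared error on a test set into a squared-bias term and a variance term. This is a hand-rolled approximation written for illustration, not the implementation used by any library, and the helper names, the deliberately simple model, and the toy data are all assumptions.

```python
import random
from statistics import mean


def bias_variance(fit, train, test, rounds=200, seed=0):
    """Estimate squared-error loss, bias^2 and variance via bootstrap rounds."""
    rng = random.Random(seed)
    # predictions[r][i] is the round-r model's prediction for test point i.
    predictions = []
    for _ in range(rounds):
        sample = [rng.choice(train) for _ in train]  # bootstrap sample
        model = fit(sample)
        predictions.append([model(x) for x, _ in test])

    targets = [y for _, y in test]
    # Average prediction per test point across all rounds.
    main = [mean(column) for column in zip(*predictions)]
    loss = mean((p - y) ** 2
                for row in predictions for p, y in zip(row, targets))
    bias_sq = mean((m - y) ** 2 for m, y in zip(main, targets))
    variance = mean((p - m) ** 2
                    for row in predictions for p, m in zip(row, main))
    return loss, bias_sq, variance


def fit_mean(sample):
    """A deliberately simple (high-bias) model: always predict the mean y."""
    constant = mean(y for _, y in sample)
    return lambda x: constant


# Hypothetical noise-free data from y = 2x + 1.
train = [(x, 2.0 * x + 1.0) for x in range(10)]
test = [(x + 0.5, 2.0 * (x + 0.5) + 1.0) for x in range(10)]
loss, bias_sq, variance = bias_variance(fit_mean, train, test)
print(f"loss={loss:.3f} bias^2={bias_sq:.3f} variance={variance:.3f}")
```

With squared error, the average loss equals the squared bias plus the variance, so the three printed numbers should be consistent with each other. For this constant-prediction model the bias term dominates, which is what underfitting looks like in the dartboard picture.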

Bootstrap Aggregating Bagging Youtube

Ensemble Learning Bagging And Boosting Explained In 3 Minutes

Bagging Ensemble Meta Algorithm For Reducing Variance By Ashish Patel Ml Research Lab Medium

Bagging Classifier Python Code Example Data Analytics

Bagging And Boosting Explained In Layman's Terms By Choudharyuttam Medium

Mathematics Behind Random Forest And Xgboost By Rana Singh Medium

Learn Ensemble Methods Used In Machine Learning

Guide To Ensemble Methods Bagging Vs Boosting

A Bagging Machine Learning Concepts

Bagging Classifier Instead Of Running Various Models On A By Pedro Meira Time To Work Medium

A Primer To Ensemble Learning Bagging And Boosting

Ml Bagging Classifier Geeksforgeeks

What Is Bagging In Machine Learning And How To Perform Bagging

Ensemble Learning Bagging Boosting Stacking And Cascading Classifiers In Machine Learning Using Sklearn And Mlextend Libraries By Saugata Paul Medium

Ensemble Learning Bagging Boosting Ensemble Learning Learning Techniques Deep Learning

Bootstrap Aggregating Wikiwand

Boosting And Bagging Explained With Examples By Sai Nikhilesh Kasturi The Startup Medium